regularization machine learning mastery

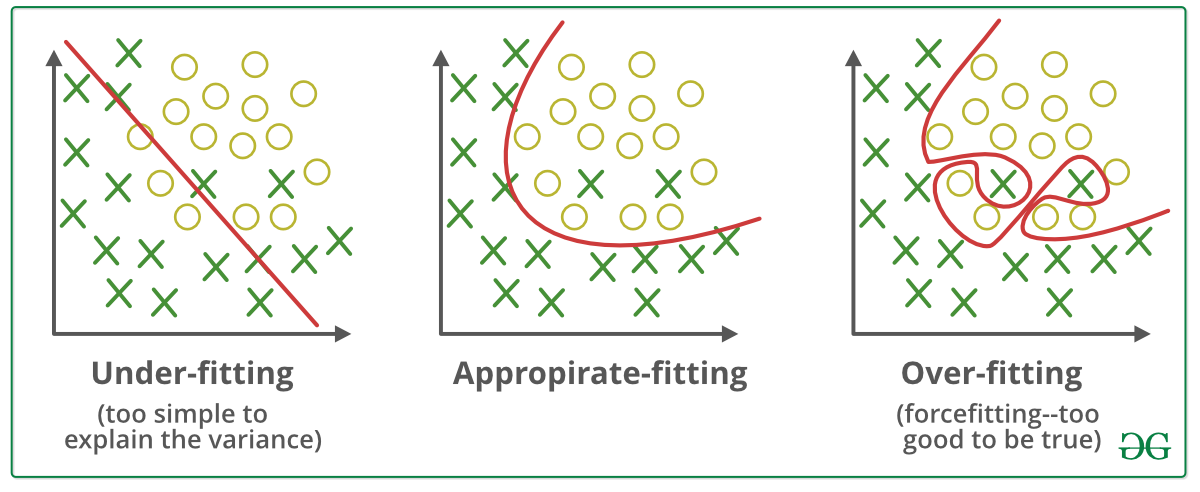

Regularization is a widely used technique for penalizing complex models in machine learning. It reduces overfitting, and with it the generalization error, by keeping the network weights small.

Regularization In Deep Learning Pros And Cons By N N Medium

Regularization can be implemented in multiple ways, by modifying the loss function, the sampling method, or the training approach itself. Examples include dropout, data augmentation, and early stopping.
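As an illustration of the dropout technique mentioned above, here is a minimal NumPy sketch of "inverted" dropout. The function name `dropout` and its parameters are hypothetical and chosen only for this example; deep learning libraries ship their own dropout layers, which should be preferred in practice.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Inverted dropout (illustrative sketch, not a library API).

    During training, each unit is zeroed with probability `rate` and the
    surviving units are scaled by 1 / (1 - rate) so the expected value of
    the output matches the input. At inference time the input is returned
    unchanged.
    """
    if not training or rate == 0.0:
        return activations
    keep_prob = 1.0 - rate
    # Boolean mask: True where the unit is kept.
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
x = np.ones((1000, 100))

dropped = dropout(x, rate=0.5, rng=rng)              # training mode
unchanged = dropout(x, rate=0.5, rng=rng, training=False)  # inference mode
```

Because of the rescaling, the mean activation stays roughly the same in training and inference, so no extra correction is needed when the model is used for prediction.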

It means the model is not able to.

Regularization is one of the most basic and important concepts in machine learning. Regularization is any modification we make to a learning algorithm that is intended to reduce its generalization error but not its training error.

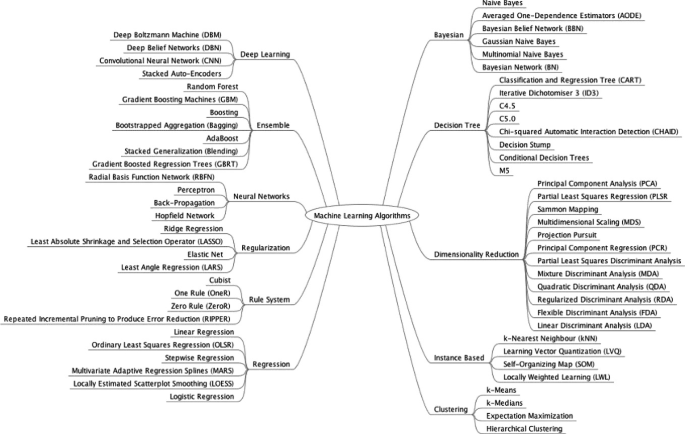

L2 regularization is the most common form of regularization. It adds a penalty proportional to the sum of the squared weights to the loss function. Machine learning involves equipping computers to perform specific tasks without explicit instructions.
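To make L2 regularization concrete, the sketch below adds a squared-weight penalty to a mean squared error loss for a linear model. The function names, the tiny dataset, and the penalty strength `lam` are assumptions made for this example and do not come from the original text.

```python
import numpy as np

def l2_regularized_mse(w, X, y, lam):
    """Mean squared error plus an L2 penalty, lam * sum(w ** 2)."""
    residual = X @ w - y
    data_loss = np.mean(residual ** 2)
    penalty = lam * np.sum(w ** 2)
    return data_loss + penalty

def l2_regularized_mse_grad(w, X, y, lam):
    """Gradient of the loss above with respect to w."""
    n = X.shape[0]
    return 2.0 * X.T @ (X @ w - y) / n + 2.0 * lam * w

# Hypothetical toy data, used only to exercise the functions.
X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25])

plain_loss = l2_regularized_mse(w, X, y, lam=0.0)
regularized_loss = l2_regularized_mse(w, X, y, lam=0.1)
grad = l2_regularized_mse_grad(w, X, y, lam=0.1)
```

The extra term `2.0 * lam * w` in the gradient pulls every weight toward zero at each update step, which is why L2 regularization is often described as weight decay.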

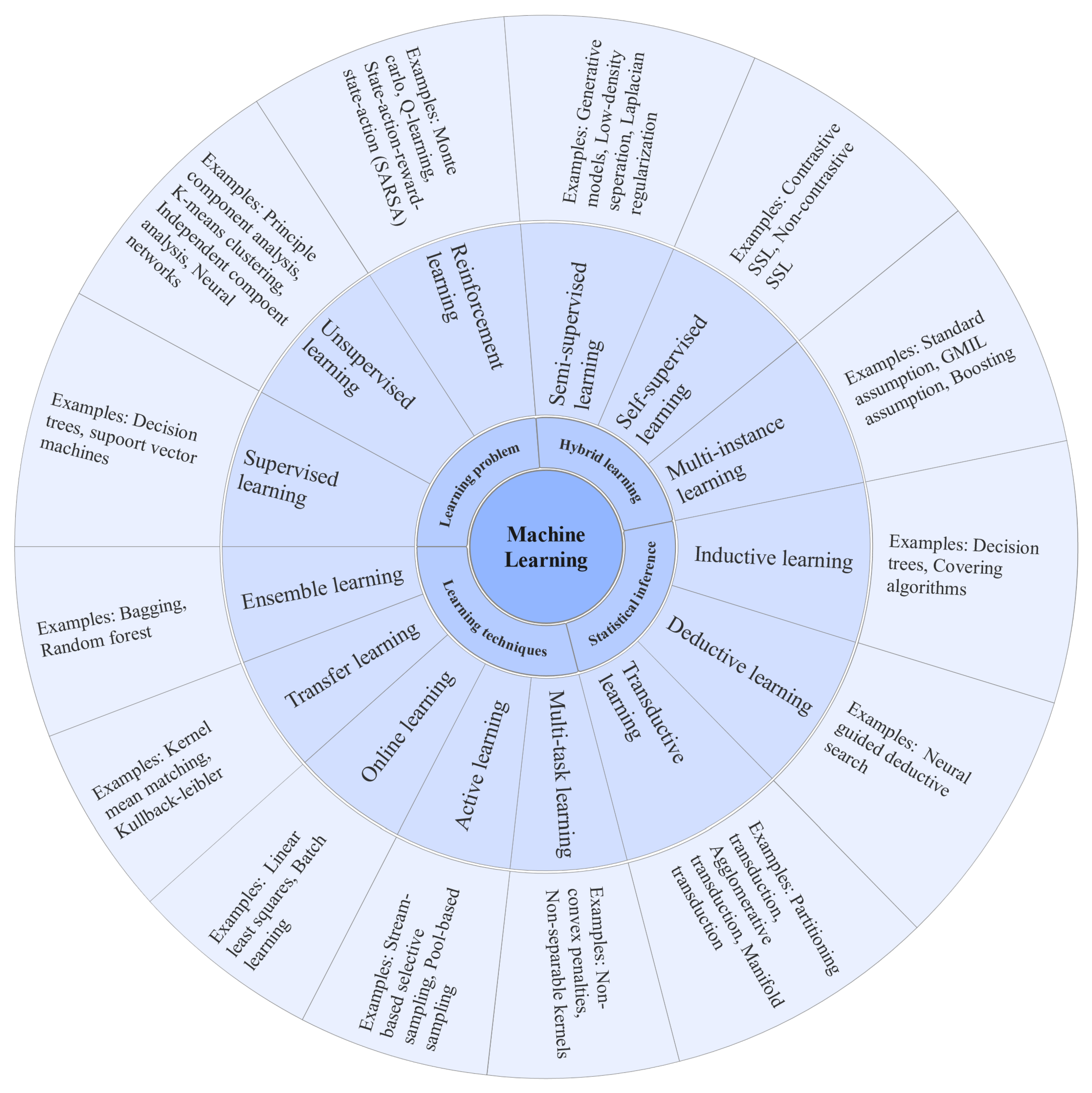

The answer is regularization. In simple words, regularization discourages learning a more complex or flexible model, so as to reduce the risk of overfitting. Machine learning systems are programmed to learn and improve from experience automatically.

Data scientists typically use regularization in machine learning to tune their models during the training process. Page 120, Deep Learning, 2016.

The commonly used regularization techniques include L2 regularization (ridge regression), dropout, data augmentation, and early stopping. The included `regularization_ridge.py` file contains the code that adds ridge regression.

Regularization is a technique to prevent the model from overfitting by adding extra information to it. In Figure 4, the black line represents a model without ridge regression applied, and the red line represents a model with ridge regression applied. Note how much smoother the red line is.
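The smoothing effect of ridge regression described above can be reproduced with a small experiment. The sketch below is not the code from the original file; it is a stand-in that fits a degree-9 polynomial to noisy data with and without a ridge penalty, using hypothetical names (`fit_ridge`, `lam`) and synthetic data chosen only for illustration.

```python
import numpy as np

def fit_ridge(X, y, lam):
    """Closed-form ridge solution: solve (X^T X + lam * I) w = X^T y.

    For simplicity this sketch also penalizes the intercept column.
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)

# Synthetic data: a noisy sine curve sampled at 20 points.
rng = np.random.default_rng(42)
x = np.linspace(-1.0, 1.0, 20)
y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(x.shape)

# Degree-9 polynomial features: columns are x**0, x**1, ..., x**9.
X = np.vander(x, N=10, increasing=True)

w_plain = np.linalg.lstsq(X, y, rcond=None)[0]  # no regularization
w_ridge = fit_ridge(X, y, lam=0.1)              # ridge regression

print("coefficient norm without ridge:", np.linalg.norm(w_plain))
print("coefficient norm with ridge:   ", np.linalg.norm(w_ridge))
```

The ridge fit has a smaller coefficient norm than the unregularized fit, and smaller polynomial coefficients generally produce a curve with less wiggle between the data points.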

Regularization is used in machine learning as a solution to overfitting by reducing the variance of the ML model under consideration. Regularization is essential in machine and deep learning. One of the major aspects of training your machine learning model is avoiding overfitting.

The cheat sheet below summarizes different regularization methods. A regularized model will probably do a better job against future data.

Adding ridge regression is as simple as adding an additional penalty term to the loss function. Regularization is one of the central concerns of the field of machine learning, rivaled in its importance only by optimization. Regularization can be split into two buckets.

Regularization is one of the most important concepts of machine learning. Sometimes a machine learning model performs well on the training data but does not perform well on the test data.

Machine Learning Based Disease Diagnosis Encyclopedia Mdpi

Machine Learning Mastery Workshop Enthought Inc

Overview Of The Artificial Intelligence Methods And Analysis Of Their Application Potential Springerlink

Gentle Introduction To The Bias Variance Trade Off In Machine Learning

What Role Does Regularization Play In Developing A Machine Learning Model When Should Regularization Be Applied And When Is It Unnecessary Quora

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

Machine Learning Mastery With R Get Started Build Accurate Models And Work Through Projects Step By Step Pdf Machine Learning Cross Validation Statistics

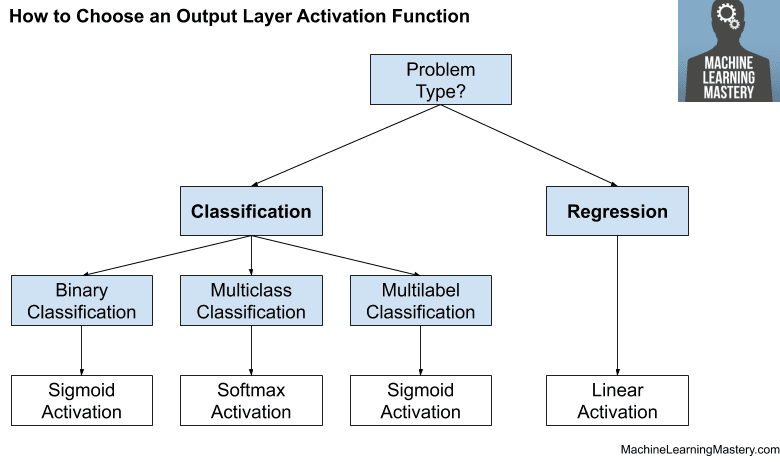

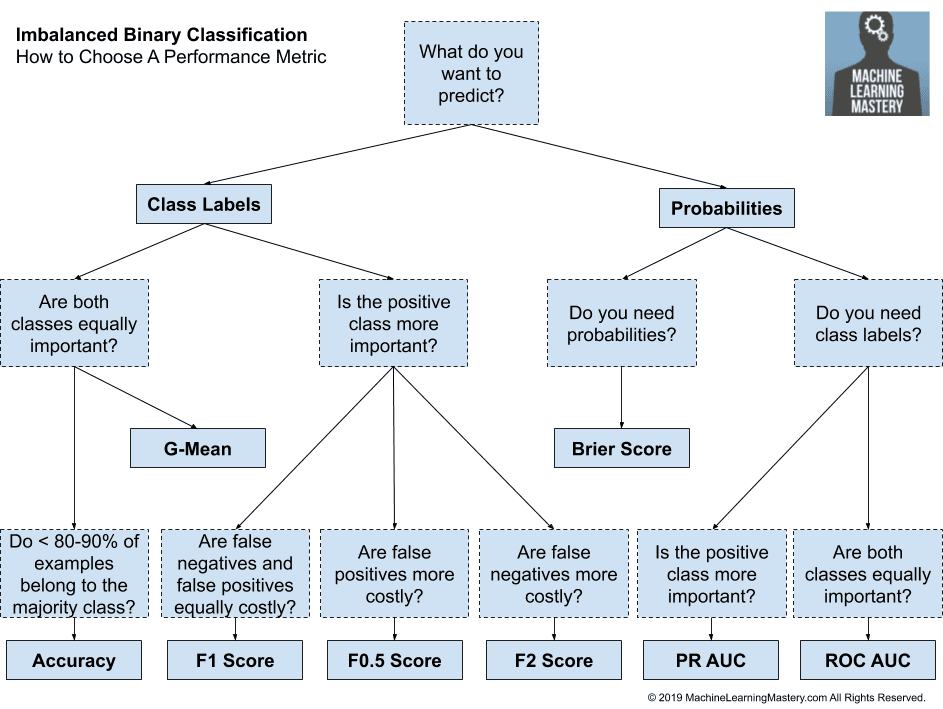

How To Choose An Evaluation Metric For Imbalanced Classifiers Data Visualization Class Labels Machine Learning

Machine Learning Mastery Jason Brownlee Machine Learning Mastery With Python 2016

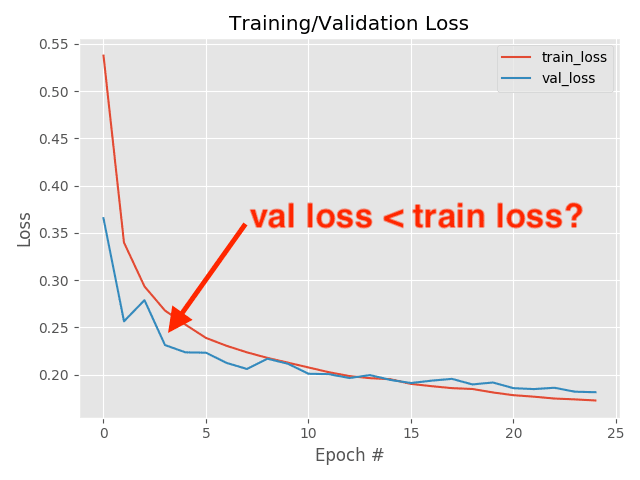

Why Is My Validation Loss Lower Than My Training Loss Pyimagesearch

A Gentle Introduction To The Rectified Linear Unit Relu

Machine Learning Keep Learning

What Is Regularization In Machine Learning

8 Tactics To Combat Imbalanced Classes In Your Machine Learning Dataset

Start Here With Machine Learning

Day 3 Overfitting Regularization Dropout Pretrained Models Word Embedding Deep Learning With R

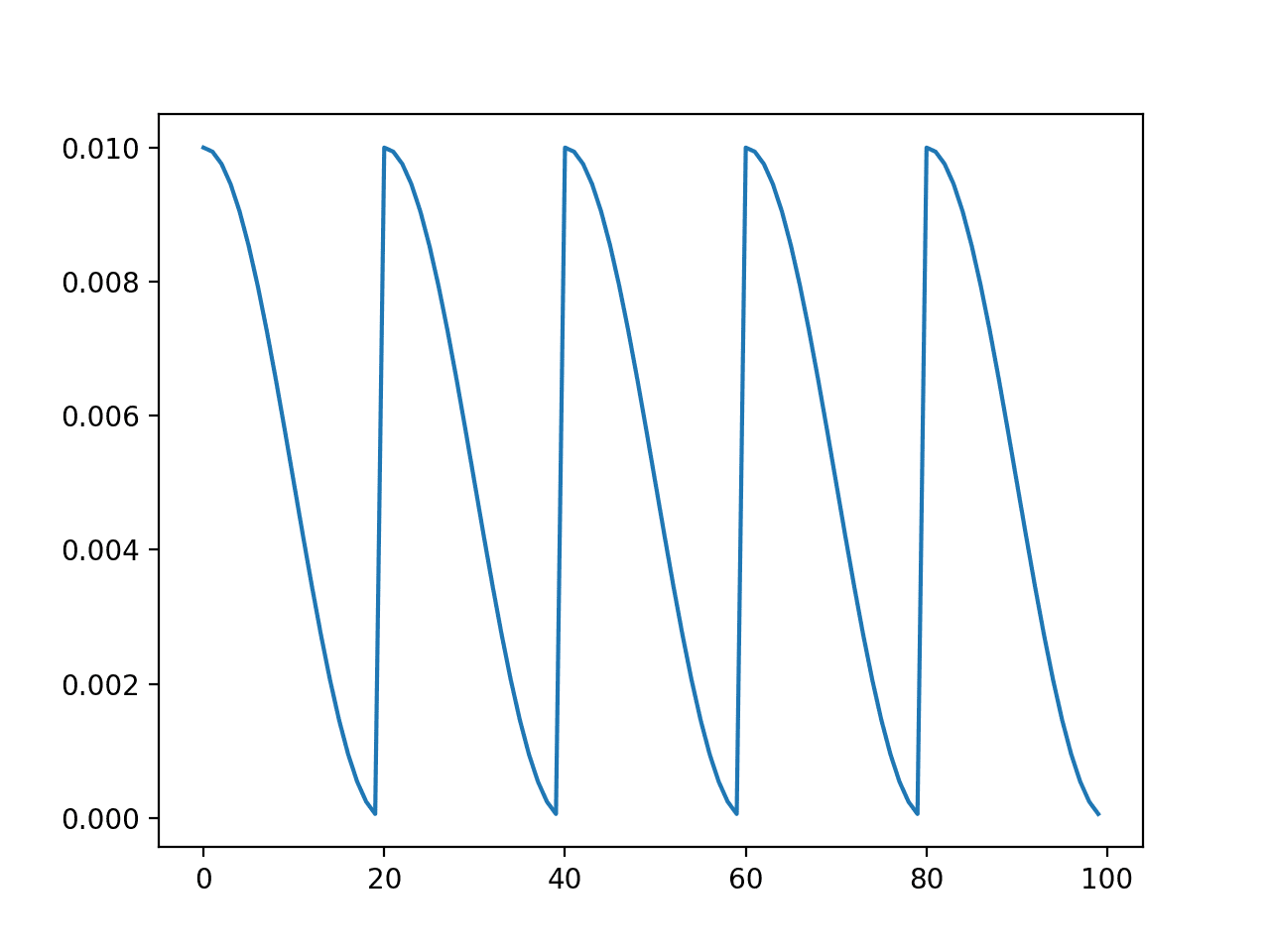

Understand The Impact Of Learning Rate On Neural Network Performance

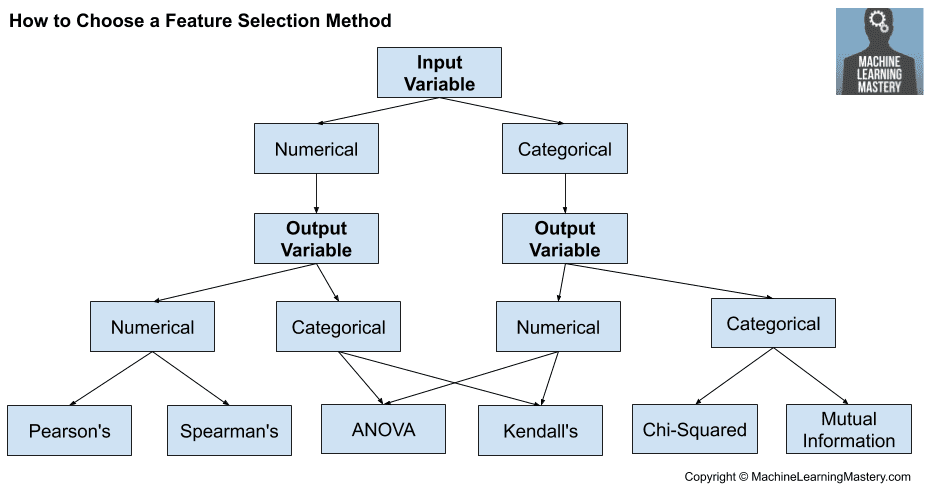

How To Choose A Feature Selection Method For Machine Learning